The Growing Presence of Generative AI in Government Operations

State and local governments across the United States are actively exploring ways to use generative artificial intelligence tools, such as ChatGPT, to improve administrative efficiency and public services. However, this rapid adoption has ignited debates over ethics, data security, and public transparency. Agencies are taking inconsistent approaches to the technology, and the split is visible even at the federal level: the U.S. Environmental Protection Agency has prohibited employee access to ChatGPT, while U.S. State Department staff in Guinea are using it for tasks such as drafting speeches.

The Mixed Bag: Different Approaches Across States

States are taking contrasting positions on generative AI. Maine, for example, has imposed a temporary ban on the use of generative AI by its executive branch, citing cybersecurity concerns. In contrast, Vermont is encouraging its government employees to use the technology for educational purposes, such as learning new programming languages, according to Josiah Raiche, the state’s director of artificial intelligence.

Local Governments and Generative AI: A Closer Look

Municipalities are also formulating guidelines to regulate the use of generative AI. San Jose, California, has drafted a 23-page guideline document and requires municipal workers to fill out a form each time they use an AI tool such as ChatGPT. Nearby Alameda County has opted for a more flexible approach: it has organized educational sessions on the risks associated with AI but has not yet established a formal policy. Sybil Gurney, the county’s assistant chief information officer, remarked that the county’s focus is on “what you can do, not what you can’t do.”

Optimism Tempered by Accountability

Government officials acknowledge the potential of generative AI to alleviate bureaucratic inefficiencies. Yet, they also stress the unique challenges posed by the public sector’s need for transparency, adherence to strict laws, and the overarching sense of civic responsibility. Jim Loter, Seattle’s interim chief technology officer, emphasizes the elevated level of accountability required when implementing AI in governmental processes. He believes that public disclosure and equitable practices must be a priority, especially given the high stakes involved in government decision-making.

Scenarios Highlighting the Stakes: A Case in Iowa

Recent events have shown just how high those stakes can be. Last month, an assistant superintendent in Mason City, Iowa, used ChatGPT to help decide which books should be removed from school libraries. The removals were mandated by state law, but the use of ChatGPT to help select the books attracted national attention and scrutiny, raising questions about accountability for AI-assisted decisions in government.

Public Record Laws and Data Security

Public records and data security add a further layer of complexity. San Jose, Seattle, and Washington State have alerted staff that any data entered into generative AI tools is subject to public record laws. That data is also used to train the underlying models, increasing the risk of sensitive information being leaked or mishandled. Moreover, a study from the Stanford Institute for Human-Centered Artificial Intelligence points out that the more accurate these AI models become, the likelier they are to reuse large blocks of content from their training sets.

Challenges in Healthcare and Criminal Justice

Healthcare and criminal justice agencies face an additional set of problems. Consider a hypothetical in which Seattle employees use AI to summarize investigative reports from the city’s Office of Police Accountability. These reports may contain information that is sensitive yet still subject to public record laws, which complicates using AI on them responsibly.

Legal Implications and Public Trust

In Arizona, Maricopa County Superior Court employees use generative AI for internal purposes, but Aaron Judy, the court’s chief of innovation and AI, admits there would be reservations about using it for public communications. The underlying concern is the ethical dilemma of using citizen input to train a profit-oriented AI model.

Generative AI in Civic Technology and Future Policies

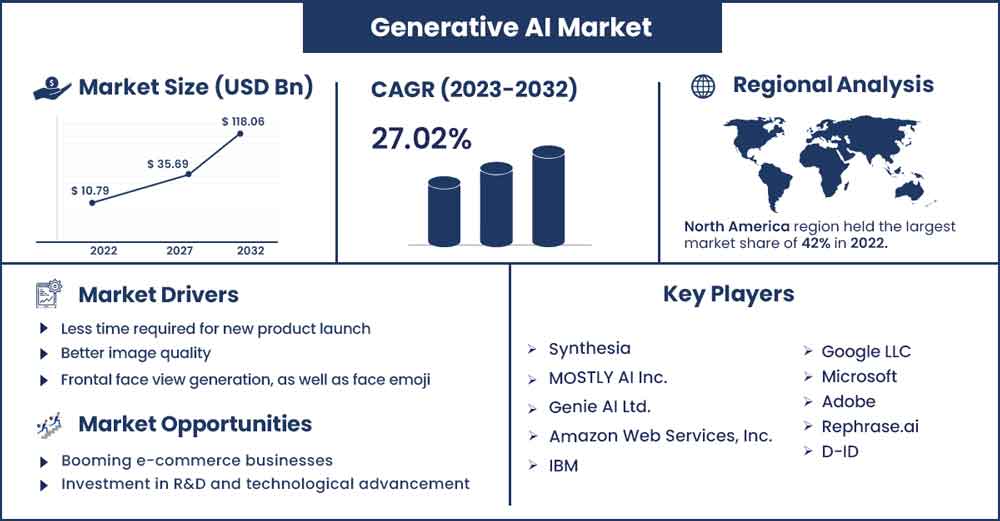

Major tech companies and consultancies, such as Microsoft, Google, Deloitte, and Accenture, are actively promoting generative AI solutions at the federal level. Cities and states are expected to refine and improve their policies in the coming months. Albert Gehami, San Jose’s privacy officer, predicts that these rules will undergo substantial evolution as the technology matures.

The Need for Federal Oversight

While local and state governments have made initial strides in formulating AI policies, there’s a pressing need for federal guidelines. Alexandra Reeve Givens, president and CEO of the Center for Democracy and Technology, believes the situation demands “clear leadership” from the federal government to establish detailed, standardized protocols.

Conclusion: The Balancing Act

As generative AI becomes more integrated into government operations, the challenge will be to balance its benefits to the public against the need for data security, ethical standards, and transparency. While governments continue to experiment and learn, close scrutiny and responsible implementation will be key to realizing the technology’s potential.